The concept of a "host culture"

The term "host culture" does not seem to be used much in an academic context today. One of its earliest uses comes from post-WW2 sociology, where it referred to the conflict between immigrants and the dominant culture that they encounter in their new country. The assumption was that a "failure" to assimilate on the part of immigrants led to a racist backlash in mainstream society.

https://en.wikipedia.org/wiki/Immigrant-host_model

The immigrant-host model was an approach that developed in postwar sociology to explain the new patterns of immigration and racism. It explained the racism of hosts as a reaction to the different cultural traditions of the immigrants, which acted as obstacles for their economic development and social integration.[1] The model assumed that the disruption immigration caused to stability would be solved by the cultural assimilation of immigrants into the dominant culture.

This perspective is rooted in the integrationist model that underlay the civil rights movement of the 1960s, which began to disintegrate in the face of the successes and failures of that movement. Even after the enforcement of voting rights and desegregation, blacks found that old prejudices did not change with the change in laws, and even where attitudes did change, economic conditions did not. Also, among northern whites who supported the civil rights movement when it rolled through the South, the quasi-liberal segregationist slogan of "separate but equal" became appealing when the movement took hold in their own neighborhoods. White supremacy had been squelched in the South, but white and black separatism replaced it. The South transformed and became the "New South" that relinquished explicit racial domination in favor of an unofficial policy of avoidance -- and so did the rest of the USA. Hence the 1970s renaissance of American multiculturalism. This is the current orthodoxy across the political spectrum.

Subsequently, in sociology, the integrationist immigrant-host model fell out of fashion. The perceived problem was no longer immigrants clinging to their culture and refusing to assimilate, but an unfair and exploitative situation in which immigrants were discriminated against on the pretext of their difference. The 1950s immigrant-host model was itself now perceived as yet another example of the racist "blame-the-victim" mentality of the dominant society.

Since the 1970s, however, the assumptions in this model have been increasingly discarded in the sociology of race relations. The core idea of the model that the immigrants' children would gradually assimilate and, thus, that racism and racial inequality would cease proved false. The model was criticized and blamed for reflecting and, even, reinforcing the racist assumptions by describing the cultures of immigrants as social problems and ignoring the role structural inequality plays in their subjugation.

The very concept of a "host culture" seems to have disappeared from the social sciences. In fact, there is no coherent definition of a "host culture" out there anywhere. The concept of a host culture seems to have been appropriated by the travel industry, and is no longer used in an academic world that would at least take pains to define its terms. The typical travel industry website makes a distinction between tourists or "guests", on the one hand, and the "host culture" of the tourist destination, on the other hand. The host culture is the mainstream culture of those destinations. Notably, the host culture is not the culture of indigenous people in places like Australia or Brazil, but rather the mainstream, dominant culture of those countries.

The "host culture" in Hawaii

The one exception to this trend is found on the Travel Weekly website, where the author distinguishes between the "host culture" and the "local culture". He takes Hawaii as his example.

https://www.travelweekly.com/Arnie-Weissmann/Host-culture-local-culture

One consequence of globalization is an acceleration in the gap that's widening between host cultures and local cultures. The implications for the travel industry are significant.

Hawaii is the destination that perhaps provides the clearest distinction between a host culture and a local culture.

The Hawaiian traditions, language, customs and, above all, the aloha spirit, define its host culture. Sacred as that is, the influences of everything and everyone who have passed through or settled on the islands has made an impact on modern Hawaiian life and shaped a distinctive local culture that's quite different from the host culture.

World War II GIs can take credit, if they want, for the prevalence of Spam on local menus. And Japanese tourists' demand for familiar ingredients has helped the local cuisine evolve in, well, less processed ways.

Today, both local and host cultures are being erased by the spread of corporate mass culture -- Starbucks, McDonalds, Walmart.

In Hawaii, host culture and local culture coexist in relative harmony, in large measure because few in the local culture challenge the importance of the host culture, and the host culture's spirit is so inherently welcoming that many outside influences are not viewed as conflicting with traditional beliefs.

But elsewhere, globalization has been accused of contributing to the dilution of dominant host cultures while concurrently contributing to the homogenization of local cultures.

This part of the story is familiar: Fast-food outlets, retail stores and hotels have become brands without borders. Popular culture, from singers to movie franchises, is similarly ubiquitous.

It would seem that the world is moving toward sameness just as it's getting easier for more people to move around and explore the world's differences.

As far back as the 1950s, the French historian Fernand Braudel spoke of "global civilization" as a modernizing force that was transforming the world into somewhat similar societies. There is some irony in the French fear of globalization -- in particular, the spread of American corporate mass culture -- as a threat to the French sense of distinctiveness. Historically, it was other countries -- notably, German-speaking lands -- that chafed under the universal appeal of a French culture that aggressively broadcast itself throughout Europe and the world. Paralleling the split between Protestantism and Catholicism, Germans distinguished "Kultur", understood as distinctive inward spiritual values, from the outward, material aspects of "civilization", such as political and legal systems, economies, technology, infrastructure, even rationality and science, which they saw as a threat to those values. (See Adam Kuper's 1999 book "Culture: The Anthropologists' Account".)

Historically, the role of mass culture is downplayed in normative debates on how immigrants should behave in relation to society. In the USA, three models vied for legitimacy:

- Anglo-dominant assimilation, in which immigrants gratefully conform -- at least outwardly -- to the sociocultural norms of the society that has admitted them;

- fusion and synthesis of various cultures, from which a "New American" culture would be born; and

- multiculturalism, in which subcultures maintain their distinct identity.

These normative debates on how people should act might fail to notice the descriptive realities of how ethnic groups actually do act. The reality of how groups behave turns out to be a mix of all three models: ethnic groups simultaneously conform, create new cultures and also remain distinct. Moreover, there is a huge element of "global civilization" and "mass culture" in ethnic cultures that is distinct from those three models. Making things even more complicated, "global civilization" consists to a remarkable extent of creative, even subversive, proudly local popular cultures of lower-class minorities -- for example, the rap music of urban America -- that have been packaged and sold by multinational corporations that are usually associated with bland, generic "mass culture".

But back to the distinction between "host cultures" and "local cultures" made above by the travel writer. Usually the host culture is associated with the majority population ("British culture"), and local cultures refer to particular subsets of that population (rural Yorkshire). The typical use of these terms when applied to Hawaii, however, reverses this definition -- the local culture is associated with the majority, and the host culture is associated with Native Hawaiians who are a subset of that local population. Logically, Native Hawaiians should be considered a "native" element within the local culture, and this local culture would properly be considered the host culture of Hawaii.

However ... Hawaii might be a special case. As the travel writer explained, there is a sense that the Native Hawaiian culture exerted and still exerts a formative influence on the locality. Beyond the cultural influence of Native Hawaiians on contemporary Hawaii, one finds early influences in the legal-political institutions, from the very strong powers granted to Hawaii's governor (as opposed to Texas, where the governor has more limited power) down to simple government functions (in Hawaii, the deed to a house is kept not by the owner but by two separate State bureaucracies, an arrangement dating back to the Kingdom). This is to say that Hawaii's local population is to some extent still operating within a framework that is a holdover from a host society that was predominantly Native Hawaiian. Economically, Native Hawaiians might have lost ground in relation to the rest of Hawaii's population, but they -- as did their ancestors -- still exert an outsized cultural influence on the local population.

The concept of "cultural hegemony"

This confounds the notion of "cultural hegemony", usually understood as the upper classes imposing their values, attitudes and perspectives onto the general population. At one time in Hawaii, the Hawaiian upper classes did do just that, but that culture has persisted despite the waning of the Hawaiian upper classes. As stated above, it is ordinary Hawaiians who still exert a strong cultural influence on the local population.

https://en.wikipedia.org/wiki/Cultural_hegemony

In Marxist philosophy, cultural hegemony is the domination of a culturally diverse society by the ruling class who manipulate the culture of that society—the beliefs, explanations, perceptions, values, and mores—so that their imposed, ruling-class worldview becomes the accepted cultural norm; the universally valid dominant ideology, which justifies the social, political, and economic status quo as natural and inevitable, perpetual and beneficial for everyone, rather than as artificial social constructs that benefit only the ruling class.

The 2011 movie "The Descendants" intimates something like this.

https://en.wikipedia.org/wiki/The_Descendants

Matthew ("Matt") King is a Honolulu-based attorney and the sole trustee of a family trust of 25,000 pristine acres on Kauai. The land has great monetary value, but is also a family legacy.

By the end of the movie, Matt King rejects selling the land, arguing that his family was originally Native Hawaiian, but they married into a long series of white Americans until they became white Americans. The final scene in the movie involves multiple reconciliations.

Later, the three are at home sitting together sharing ice cream and watching television, all wrapped in the Hawaiian quilt Elizabeth had been lying in.

The film rankled a critic in Hawaii, Chad Blair, because of its elitism.

https://www.civilbeat.org/2012/04/15402-descent-into-haole-the-descendants-dissed/

Things seemed promising early on in the film: shots of boxy apartment buildings, congested freeways, Diamond Head devoid of rainfall, homeless people downtown, Chinatown shoppers, squatters on beaches.

“Paradise?” says Clooney’s character. “Paradise can go fuck itself.”

I thought: Tell it like it is, Alexander Payne!

But that all changed, right about the time I learned that Clooney’s surname is King and that he’s descended from Hawaii royalty and haole aristocracy and that he lives in a beautiful house in Manoa (or Nuuanu or Tantalus; someplace like that) with a swimming pool and the kids go to Punahou and they hang out at the Outrigger Canoe Club and they own a beach and mountain on the Garden Isle.

“The Descendants” had gone from hibiscus and Gabby Pahinui to a dashboard hula doll and Laird Hamilton in just minutes, and the film kept right on descending.

Blair implies that the book was written by a member of Hawaii's white elite, and that the non-white elite loved it.

On March 1, the Hawaii State Senate honored Kaui Hart Hemmings, the author of the book version of “The Descendants” and an advisor to the film. (And stepdaughter of former GOP state Sen. Fred Hemmings.)

“We are very proud of Kaui Hart Hemmings and the role she played in showcasing Hawaii in her novel. She shares the story of life in our islands through the eyes of a Kamaaina, which everyone in the world would be able to appreciate,” said Senate Majority Leader Brickwood Galuteria, who presented the certificate. That’s from a Senate press release.

“Scenery from Kauai’s iconic properties and landscapes are beautifully photographed and highlighted in the film, thanks to Kaui and the producers of the movie,” said Sen. Ron Kouchi of Kauai. “We are pleased with being able to share our island lifestyle with those who watch the movie.”

“Our island lifestyle”? More like the richest 1 percent, the ones teeing up at Princeville.

The local politicians loved the movie, and maybe that in part reflects a complacent attitude toward hierarchy in Hawaii that winds through Hawaii's colonial era but was even more profound in the pre-contact era. The flip side of the Native Hawaiian legacy was authoritarianism, and one can see that strain in Hawaii's political culture, which otherwise exhibits a casual populism and egalitarianism.

On the other hand, Chad Blair applauds how a little bit of social criticism was sneaked into the movie in George Clooney's big speech, which is included in Blair's article (in big bold letters).

We didn’t do anything to own this land — it was entrusted to us. Now, we’re haole as shit and we go to private schools and clubs and we can hardly speak Pidgin let alone Hawaiian.

But we’ve got Hawaiian blood, and we’re tied to this land and our children are tied to this land.

Now, it’s a miracle that, for some bullshit reason, 150 years ago, we owned this much of paradise. But we do. And, for whatever bullshit reason, I’m the trustee now and I’m not signing.

In the case of Hawaii, the term "host culture" might be understood as the culture that is secretly hegemonic amidst a mix of all sorts of ethnicities. "The Descendants" does a pretty good job of showing how among those who descend from the old white elite, there is still some sort of residue of values and attitudes that derive from Native Hawaiian culture. That might be something that people in Hawaii -- and their politicians -- could recognize when they saw the movie.

California uber alles?

A sharp contrast to "The Descendants" might be found in the 2010 comedy-drama "The Kids Are All Right".

https://en.wikipedia.org/wiki/The_Kids_Are_All_Right_(film)

Nic (Annette Bening) and Jules (Julianne Moore) are a married same-sex couple living in the Los Angeles area. Nic is an obstetrician and Jules is a housewife who is starting a landscape design business. Each has given birth to a child using the same sperm donor.

The kids locate the donor, Paul, who has an affair with Jules and wants to marry her and adopt the kids. This causes a crisis, but by the end of the movie, there is a reconciliation.

The next morning, the family drives Joni to college. While Nic and Jules hug Joni goodbye, they also affectionately touch each other. During the ride home, Laser tells his mothers that they should not break up because they are too old. Jules and Nic giggle, and the film ends with them smiling at each other and holding hands.

The actual feel of the final scene of the movie is not as warm as a description of it might seem. Joni breaks down as she watches her family drive away because her parents may split, but also perhaps because she is now an adult and on her own. It is a very different final scene from that of "The Descendants". It's much more classically American, and all very Californian.

["The Kids Are All Right", 2010, final scene, "You're too old."]

https://www.youtube.com/watch?v=eodbWmxFgOU

Does California exert an outsized cultural influence on suburban America? And insofar as California is culturally a proverbial "postcard from the future", does California help the rest of America deal with the social changes that California has already undergone prior to other places (for example, the blended family of the "Brady Bunch")? That is, through a television industry that is very Californian, does the rest of middle-class, suburban America find a culture that it can relate to despite the provincialism of its own surroundings? For example, the TV show "Freaks and Geeks" was set in the Midwest in the 1980s, but that region was still adjusting to the realities that had already beset more urban places like California in the 1970s -- realities like the rise of lonely latchkey children.

https://www.nytimes.com/interactive/2017/08/13/arts/freaks-and-geeks.html

A little setup: Bill Haverchuck (Martin Starr) is having a terrible time of it. He's a latchkey kid. He’s horrible in phys-ed class. And he learns, in this episode, that his mom is seriously dating his gym teacher, whom he hates.

First, the music. “I’m One” by the Who, from the 1973 album “Quadrophenia.” It builds from mournfulness (“I’m a loser / No chance to win”) to a defiant chorus. And it's a great example of how “Freaks and Geeks” chose its soundtracks. The episode is set in 1981, but it avoids on-the-nose ’80s-song choices. Paul Feig, the show’s creator, once told me that the thing about the early ’80s in the Midwest was that they were really still the ’70s.

The old switcheroo

If the dominant values can be a product of social groups that are not dominant (Native Hawaiians in Hawaii) or are not physically present in the locality (Californian influence in the Midwest, or French hegemony in traditional Europe), could one group's secret influence be replaced by that of another group?

It could be that through the latter half of the 20th century, cultural hegemony was exerted in Hawaii by local ethnic Japanese, who disproportionately made up the schoolteachers and politicians in this period (possibly, in fact, a majority of them).

https://en.wikipedia.org/wiki/Japanese_in_Hawaii

The Japanese in Hawaii (simply Japanese or “Local Japanese”, rarely Kepanī) are the second largest ethnic group in Hawaii. At their height in 1920, they constituted 43% of Hawaii's population.[2] They now number about 16.7% of the islands' population, according to the 2000 U.S. Census. The U.S. Census categorizes mixed-race individuals separately, so the proportion of people with some Japanese ancestry is likely much larger.

During elections in Hawaii, politicians are constantly talking about the welfare of the "keiki" (children) and the "kupuna" (elders). The Hawaiian language is being used, but the target audiences are Asians in general and the coveted Japanese voting bloc in particular. The image and identity of Hawaii might be located in a Polynesian past, but during the second half of the 20th century this masked the hegemony of Asian American values and attitudes. Similarly, Japanese American soldiers in WW2 supposedly suffered the highest level of fatalities in that war among American soldiers, and the entrenched rhetoric of patriotic self-sacrifice that one still encounters in Hawaii among the local Japanese ironically serves as a vehicle for perpetuating culturally conservative Asian values under the guise of Americanization.

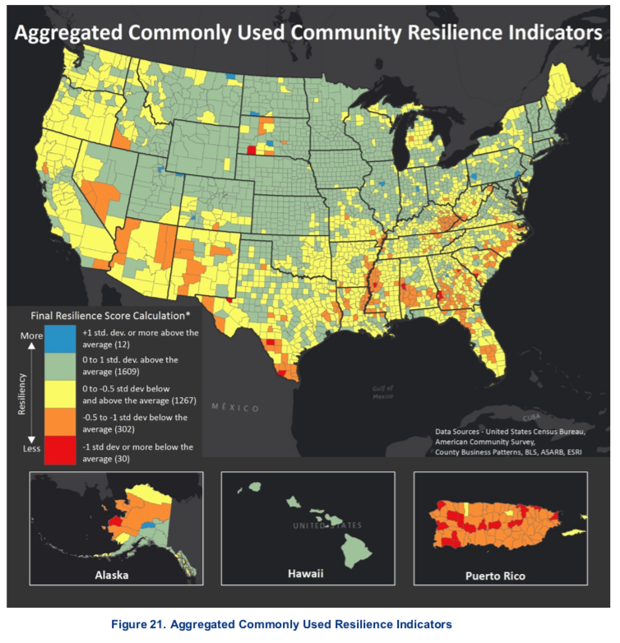

Asian cultural hegemony in Hawaii might have been crucial in terms of accelerating Hawaii's economic development in the second half of the 20th century. As became clear in the 2016 American presidential election, there is in places like rural West Virginia a segment of the population that identifies with family, community, tradition, religion and hard physical labor, and it resists urbanization and formal education. In Hawaii, the Asian and especially the Japanese population identify with those culturally conservative values, but they also put a premium on professional orientation and technical education, and this serves as a crucial bridge into the future. Without this cultural bridge between the rural community-oriented working class and the individualistic middle-class suburbs, there might not have been that much difference between the economic fortunes of Hawaii and a place like Puerto Rico. Moreover, the idea that you can "have it both ways" in Hawaii means that almost anyone can live in Hawaii and in their own little niche enjoy a very high quality of life (if they can afford it).

That was then and this is now

That being said, the 21st century might be another matter entirely. Cultural hegemony in Hawaii might have shifted yet again, this time toward mainstream American culture.

For example, the orientation of children of Asian immigrants in Hawaii today seems quite different from the past. In the past, Asian immigrants in Hawaii would typically assimilate into local culture. Today, they assimilate just as much into American culture. Consider literature: there is a local literature in Hawaii that stresses the dysfunctional continuities with the past. This contrasts with an Asian American literature in the continental USA that stresses the profound chasms between the generations that signify not just a generation gap, but thousands of years of difference ("The Joy Luck Club"). Today, however, the young writers in Hawaii who are the educated offspring of immigrants engage in a form of literature that is really typically Asian American. The torch has passed to a new generation.