Disruptive innovation can be found in the history of men’s formal wear and of language.

In fact, it’s happening right now.

Comfortable formal wear has become popular with the rise of video-conferencing from home.

A Japanese company is now selling a line of clothing that mimics the appearance of business suits but provides the comfort of sleepwear.

It’s a pajama suit.

https://www.theverge.com/tldr/2020/12/14/22174641/pajama-suit-jacket-zoom-meeting-comfort-aoki

If you’ve been showing up to work Zoom meetings in your pajamas and feel kind of weird about it, Japanese clothing retailer Aoki has a solution for you: a piece of clothing that looks like a classic suit jacket but feels like PJs. It’s calling it the Pajamas Suit, and it really does look like a suit jacket — complete with the cut and the buttons on both the sleeves and the front.

To some extent, comfortable business wear has always been available to those few who can afford it and have the power to get away with it.

Typically, the material that business suits are made of is heavy and tends not to wrinkle.

But the men at the top of the corporate totem pole often wear very expensive suits that are thin and wrinkle easily.

https://www.newyorker.com/magazine/2004/09/27/fit-for-the-white-house

Nobody is going to complain to them about their wrinkled suits.

Aside from the greater coolness and comfort, that might be part of the appeal of wearing those thin suits.

The wrinkly suit would be an assertion of power and status.

For example, when American men first began to wear earrings in the 1980s, it was thugs who did so.

Likewise, in Japan, members of motorcycle gangs often wear women’s sandals.

Wearing women’s accessories in these cases is literally an assertion that the wearer cannot be challenged for comically violating social norms.

Are pajama suits here to stay?

Are pajama suits going to replace contemporary formal wear?

More specifically, are pajama suits a form of disruptive innovation?

Disruptive innovation is when an entirely distinct fringe market composed of inferior products finds a niche, improves over time, and ends up displacing the dominant market.

https://en.wikipedia.org/wiki/Disruptive_innovation

In business theory, a disruptive innovation is an innovation that creates a new market and value network and eventually disrupts an existing market and value network, displacing established market-leading firms, products, and alliances.

The modern history of Western men’s formal wear is a history of disruptive innovation.

Originally, men in western Europe wore the doublet, which was of Spanish origin.

It developed from the padded garments worn under armor, such as the gambeson, aketon, and arming doublet.

Like many other originally practical items in the history of men’s wear, from the late 15th century onward, the doublet became elaborate enough to be seen on its own.

https://en.wikipedia.org/wiki/Doublet_(clothing)

A doublet (derived from the Ital. giubbetta[1]) is a man’s snug-fitting jacket that is shaped and fitted to the man’s body which was worn in Spain and was spread to Western Europe from the late Middle Ages up to the mid-17th century. The doublet was hip length or waist length and worn over the shirt or drawers. Until the end of the 15th century, the doublet was usually worn under another layer of clothing such as a gown, mantle, overtunic or jerkin when in public.

Again, the doublet originated as padding that underlay armor, and so it was once quite simple.

https://en.wikipedia.org/wiki/Jerkin

The jerkin was originally a simple garment.

https://en.wikipedia.org/wiki/Buff_coat

Eventually, the doublet was replaced by the suit.

https://en.wikipedia.org/wiki/History_of_suits

The man’s suit of clothes, in the sense of a lounge or business or office suit, is a set of garments which are crafted from the same cloth. This article discusses the history of the lounge suit, often called a business suit when made in dark colors and of conservative cut.

In England, the suit finds its origins in a royal decree of the 17th century.

The modern lounge suit appeared in the late 19th century, but traces its origins to the simplified, sartorial standard of dress established by the English king Charles II in the 17th century. In 1666, the restored monarch, Charles II, per the example of King Louis XIV’s court at Versailles, decreed that in the English Court men would wear a long coat, a waistcoat (then called a “petticoat”), a cravat (a precursor of the necktie), a wig, knee breeches (trousers), and a hat. However, the paintings of Jan Steen, Pieter Bruegel the Elder, and other painters of the Dutch Golden Era reveal that such an arrangement was already used informally in Holland, if not Western Europe as a whole. Many of Steen’s genre paintings include men dressed in hip-length or frock coats with shirt and trousers, which in fact more closely resemble modern suit designs than the contemporary British standard.

The royal decree was part of a larger context of the shift toward standardized clothing in early modern Europe.

The justaucorps in particular became the new European standard.

https://en.wikipedia.org/wiki/Formal_wear

Clothing norms and fashions fluctuated regionally in the Middle Ages.

More widespread conventions emerged around royal courts in Europe in the more interconnected Early Modern era. The justacorps with cravat, breeches and tricorne hat was established as the first suit (in an archaic sense) by the 1660s-1790s. It was sometimes distinguished by day and evening wear.

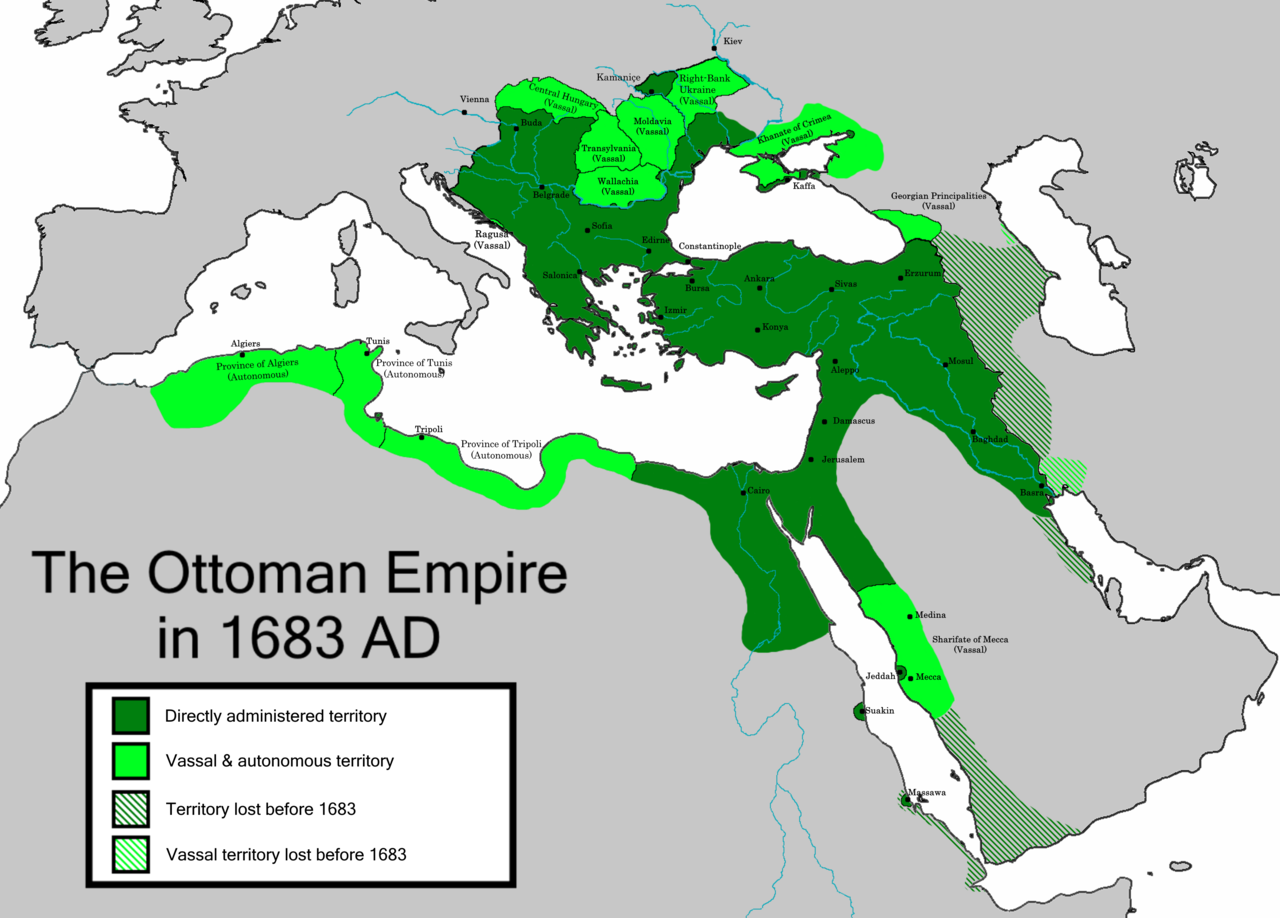

The justaucorps seems to find its origins in Asian clothing brought back by wealthy travelers, often from eastern Europe.

In eastern Europe, Asian styles were already established.

https://en.wikipedia.org/wiki/Ottoman_Empire

Similar coats known as achkans and sherwanis had been worn in India for centuries, and could be either sleeved or sleeveless. These were often worn by wealthy travellers who visited the East during the early 1600s, and some may have been brought back to England.[4][5] Another garment that came into fashion in Poland and Hungary at the same time was the zupan or dolman with its distinctive turn-back cuffs and decorative gold braid. The zupan started out as a long and heavy winter gown[6] before becoming shorter and more fitted during the 16th century.[7][8] These Central European and Indian long coats probably influenced the design of the justacorps later favored by Louis XIV of France[9] and King Charles due to their exotic appearance, comfort and practicality.

Thus, the modern business suit finds its origins in pajamas.

IIRC, going back even further in history, some Indian styles of clothing might originate from western China.

The real origin is nomads from the north.

https://en.wikipedia.org/wiki/Trousers

The oldest known trousers were found at the Yanghai cemetery in Turpan, Xinjiang, western China and dated to the period between the 10th and the 13th centuries BC. Made of wool, the trousers had straight legs and wide crotches and were likely made for horseback riding.

Trousers in particular found their origin in the dress of the nomads of the Eurasian steppe.

Notably, trousers were worn by nomadic women, who often participated in warfare (as archers).

Trousers enter recorded history in the 6th century BC, on the rock carvings and artworks of Persepolis, and with the appearance of horse-riding Eurasian nomads in Greek ethnography. At this time, Iranian peoples such as Scythians, Sarmatians, Sogdians and Bactrians among others, along with Armenians and Eastern and Central Asian peoples such as the Xiongnu/Hunnu, are known to have worn trousers. Trousers are believed to have been worn by both sexes among these early users.

Associating trousers with nomads and women, Greeks and Romans disdained trousers as barbaric and feminine.

The ancient Greeks used the term “ἀναξυρίδες” (anaxyrides) for the trousers worn by Eastern nations and “σαράβαρα” (sarabara) for the loose trousers worn by the Scythians. However, they did not wear trousers since they thought them ridiculous, using the word “θύλακοι” (thulakoi), pl. of “θύλακος” (thulakos), “sack”, as a slang term for the loose trousers of Persians and other Middle Easterners.

Republican Rome viewed the draped clothing of Greek and Minoan (Cretan) culture as an emblem of civilisation and disdained trousers as the mark of barbarians.

The buttoned coat or shirt worn in South Asia likewise seems to originate from northern nomads.

https://en.wikipedia.org/wiki/Achkan

Achkan (Hindi: अचकन) also known as Baghal bandi is a knee length jacket worn by men in the Indian subcontinent much like the Angarkha.

Greeks mention achkan and gown-like dresses worn by men and women all the way during the Mauryan period. The earliest iconographic evidence for such a dress appears in sculptures and rock relief in Iran as well as paintings from the Parthian Empire. The earliest iconographic evidence in India is from the Kushan Empire, which was founded by a Central Asian nomadic tribe called Yuezhi by the Chinese. However, since the Yuezhi Kushans adopted many aspects of their culture from the Greco-Parthian Kingdom, it is plausible that this dress is the legacy of Parthian culture.

In western Europe, the evolution of the suit did not cease after the 17th century.

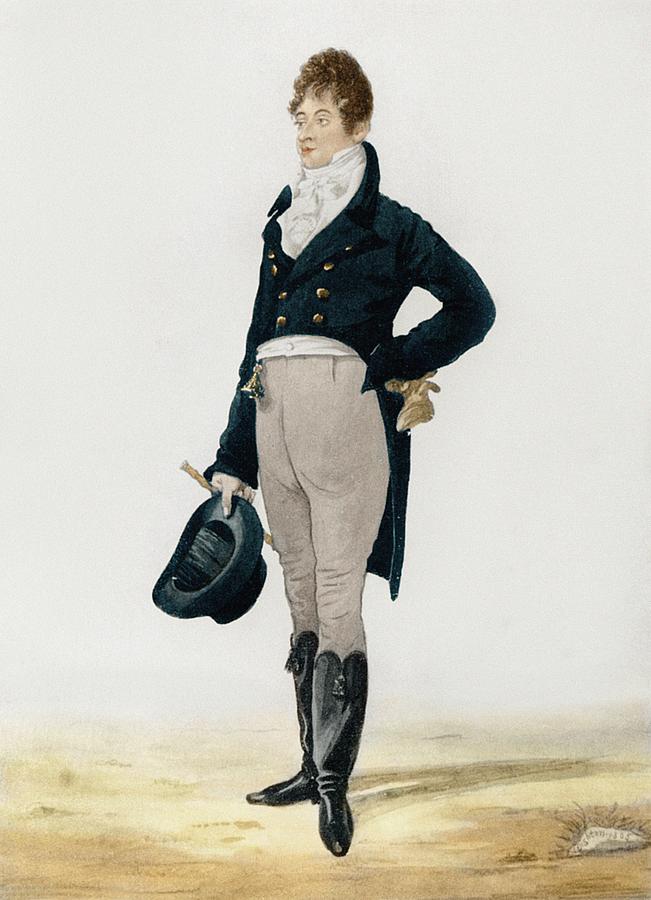

In the early 19th century, there was a turn toward further simplicity and utility in upper-class men’s wear.

Regency

In the early 19th century, British dandy Beau Brummell redefined, adapted, and popularized the style of the British court, leading European men to wearing well-cut, tailored clothes, adorned with carefully knotted neckties. The simplicity of the new clothes and their somber colors contrasted strongly with the extravagant, foppish styles just before. Brummell’s influence introduced the modern era of men’s clothing which now includes the modern suit and necktie. Moreover, he introduced a whole new era of grooming and style, including regular (daily) bathing as part of a man’s toilette. However, paintings of French men from 1794 onwards reveal that Brummell might only have adopted and popularized post-revolutionary French suits, which included tail coat, double-breasted waistcoat and full-length trousers with either Hessian boots or regular-size shoes. There is no 18th-century painting of Brummell to establish his innovation. The modern suit design seems to be inspired by the utilitarian dressing of hunters and military officers.

Later, the long, simple frock coat became popular.

The frock coat was in turn replaced by the morning coat, which (upper-class) men could wear during their morning horseback rides.

Victorian

Towards the start of the Victorian period, the frock coat, initially not just black, became popular, and quickly became the standard daily clothing for gentlemen. From the middle of the 19th century, a new (then informal) coat, the morning coat, became acceptable. It was a less formal garment, with a cut away front, making it suitable for wearing while riding.

At the same time, the dinner jacket or tuxedo emerged as a casual alternative to heavy formal wear.

This was the beginning of the “dress lounge”, the evening counterpart of the lounge suit, which is now known as the business suit.

Parallel to this, the dinner jacket was invented and came to be worn for informal evening events. It was descended from white tie (the dress code associated with the evening tailcoat) but quickly became a full new garment, the dinner jacket, with a new dress code, initially known as ‘dress lounge’ and later black tie. When it was imported to the United States, it became known as the tuxedo. The ‘dress lounge’ was originally worn only for small private gatherings and white tie (‘White tie and tails’) was still worn for large formal events. The ‘dress lounge’ slowly became more popular for larger events as an alternative to full evening dress in white tie.

In the early 20th century, the morning coat, the lounge suit, and the lounge suit’s evening counterpart, the tuxedo, all moved from casual wear into the mainstream of formal wear.

Edwardian

The beginning of the Edwardian era in the early 20th century brought a steady decline in the wearing of frock coats as the morning coat rose in relative formality, first becoming acceptable for businessmen, then becoming standard dress even in town. The lounge suit was slowly accepted as being correct outside its original settings, and during Edwardian times gradually began to be seen in town. While still reserved for private gatherings, usually with no ladies, black tie became more common.

Interwar

After the end of the first World War, most men adopted the short lounge coated suit. Long coats quickly went out of fashion for everyday wear and business, and the morning coat gained its current classification of “formal”. During the 1920s, short suits were always worn except on formal occasions in the daytime, when a morning coat would be worn. Older, more conservative men continued to wear a frock coat, or “Prince Albert coat” as it was known.

In the roaring 1920s, the new formal wear began to complexify.

In the 1920s men began wearing wide, straight-legged trousers with their suits. These trousers normally measured 23 inches around the cuff. Younger men often wore even wider-legged trousers which were known as “Oxford bags.” Trousers also began to be worn cuffed shortly after World War I and this style persisted until World War II. Trousers first began to be worn creased in the 1920s. Trousers were worn very highly waisted throughout the 1920s and this fashion remained in vogue until the 1940s. Single-breasted suits were in style throughout the 1920s and the double-breasted suit was mainly worn by older more conservative men. In the 1920s, very fashionable men would often wear double-breasted waistcoats (with four buttons on each side) with single-breasted coats. Lapels on single-breasted suits were fashionably worn peaked and were often wide. In the early 1930s these styles continued and were often even further exaggerated.

But there was also a move toward greater simplicity of design following WW2.

Post-war

Reflecting the democratization of wealth and larger trend toward simplification in the decades following the Second World War, the suit was standardized and streamlined. Suit coats were cut as straight as possible without any indication of a waistline, and by the 1960s the lapel had become narrower than at any time prior. Cloth rationing during the war had forced significant changes in style, contributing to a large reduction in the popularity of cuts such as the double-breasted suit.

The 1970s saw a complexification of the business suit under the influence of the discotheque.

In the 1970s, a snug-fitting suit coat became popular once again, also encouraging the return of the waistcoat. This new three-piece style became closely associated with disco culture, and was specifically popularized by the film Saturday Night Fever.

In the 1980s, the business suit once again simplified.

The jacket became looser and the waistcoat was completely dispensed with. A few suit makers continued to make waistcoats, but these tended to be cut low and often had only four buttons. The waistline on the suit coat moved down again in the 1980s to a position well below the waist. By 1985-1986, three-piece suits were on the way out and making way for cut double-breasted and two-piece single-breasted suits.

It is interesting that the business suit complexified in the aftermath of disillusioning wars, namely, WW1 and the Vietnam War.

The 1920s and the 1970s were both periods of hedonistic escapism.

To summarize:

- Men’s formal wear since the Middle Ages was based on the padding that lay under armor.

- (A) Over time, this clothing became elaborate and ornate.

- (1) There was a dramatic change in western European men’s formal wear in the 1600s.

- Inspired by Asian dress, the new formal wear was more practical and comfortable.

- A uniform dress code seems to have been the motive for the monarchs of England and France to mandate the new men’s wear.

- (B) Over time, this clothing became elaborate and ornate.

- (2) In the 19th century, a new style of men’s formal wear emerged, based on the utilitarian dress of hunters and military officers.

- (3) Later, simple long “frock coats” also became popular.

- (4) In their turn, these long coats were cast aside in favor of short “morning coats” better suited for riding horses.

- (5) At the same time, the dinner jacket a.k.a. tuxedo a.k.a. “dress lounge” emerged as an alternative to heavy formal wear.

- Its daytime counterpart, the lounge suit, became the business suit.

- (C) It became more elaborate in the 1920s and 1970s.

- (*) It was streamlined in the post-WW2 era and in the 1980s.

One can see above that there are periods in which

- a simpler, more useful form of men’s clothing finds a niche (examples 1, 2, 3, 4, 5);

- it becomes more elaborate and refined over time (examples A, B, C); and finally

- it becomes established as the new formal wear.

That sounds a lot like disruptive innovation.

https://en.wikipedia.org/wiki/Disruptive_innovation

An innovation that creates a new market by providing a different set of values, which ultimately (and unexpectedly) overtakes an existing market (e.g., the lower-priced, affordable Ford Model T, which displaced horse-drawn carriages)

Airbnb is a classic case of disruptive innovation.

There was always a niche market for tourists who preferred the home-sharing experience over generic hotel stays.

Today, however, business travelers who typically prefer hotels have been won over by the improved professionalism of home sharing.

In contrast to disruptive innovation, “sustaining innovation” does not significantly impact existing markets.

It may be either:

- Evolutionary. An innovation that improves a product in an existing market in ways that customers are expecting (e.g., fuel injection for gasoline engines, which displaced carburetors.)

- Revolutionary (discontinuous, radical). An innovation that is unexpected, but nevertheless does not affect existing markets (e.g., the first automobiles in the late 19th century, which were expensive luxury items, and as such very few were sold)

For example, the invention of the automobile was in itself revolutionary.

However, the automobile was initially not disruptive to transportation markets (horses and trains) because only wealthy people could afford to buy cars.

It was the mass production of cars by Ford Motor Company that was disruptive.

Likewise, Uber began as a luxury “black car” taxi service that innovated by utilizing an app.

Thus, Uber was initially an evolutionary sustaining innovation that made limousine service more convenient.

However, Uber eventually recruited ordinary people to drive their own cars.

Uber’s shift therefore amounted to the mass production of cheap taxi rides, comparable to Ford’s mass production of cars.

That is disruptive innovation.

Uber’s innovation was thus double:

- creating an app for exclusive limo service (sustaining innovation), and later

- recruiting freelance drivers, which created a whole new market of customers who would otherwise never have used taxis (disruptive innovation).

This resolves the apparent paradox of:

- Uber’s upscale origins in the dominant market rather than a fringe market and

- Uber’s eventual disruptive effect on the taxi business.

https://hbr.org/2015/12/what-is-disruptive-innovation

Uber and the automobile were born as forms of sustaining innovation, but mass production rendered them forms of disruptive innovation.

Conversely, what originated as disruptive innovation can later engage in sustaining innovation.

The long history of the business suit is a series of disruptive innovations (1-5).

Once established, the styles of suit would be improved upon (A-C).

Most recently, in the 1920s and 1970s, the business suit — now the dominant formal wear — was subject to sustaining innovation and became more elaborate and refined.

The sustaining innovations of the 1920s became established in suit design, whereas the innovations of the 1970s were rolled back during the conservative 1980s.

There might currently be new forms of men’s wear moving from the fringes of work and leisure into formal wear.

What might be the next big disruptive innovation in men’s wear?

The proliferation of personal technology poses one challenge in modern life: where to carry all of one’s devices.

Another is air travel, where airlines typically limit passengers to one carry-on bag and a single personal item.

The solution to both problems might be the adoption of the utility vest.

https://www.duluthtrading.com/dw/image/v2/BBNM_PRD/on/demandware.static/-/Sites-dtc-master-catalog/default/dw2f0dce42/images/large/81805_DEV.jpg

The utility vest might evolve to become more elegant and perhaps become the dominant form of formal wear.

The frock coat might even be reborn as a garment for storing electronics.

https://www.scottevest.com/photos/product/wtrch/xray-two-prw.jpg

In sum, the history of men’s formal wear alternates between displacement and elaboration.

A similar process might be at work in the evolution of language.

In “The Unfolding of Language”, Guy Deutscher describes how language tends toward efficiency, simplification and laziness.

For example, the Latin term hoc die (“this day”) degenerated over time into hodie in Latin and later into hui in Old French.

https://www.theguardian.com/books/2005/jul/02/featuresreviews.guardianreview13

Case endings, for instance, may be lost because of the tendency of speakers to follow an “economy” or “least effort” principle, lopping off final syllables or pronouncing them so indistinctly as to obscure whatever grammatical information they contain. In saving themselves the effort of pronouncing their endings clearly, speakers effectively destroy the case system. But as Deutscher explains, it was the same kind of laziness that created case endings in the first place. These endings were originally separate words, called postpositions (like prepositions, but placed after rather than before nouns). Speakers following the “least effort” principle simply fused postpositions with their preceding nouns, reducing two words (like “house to”) to one (“house [dative]”).

But a reverse process of complexification is also at work in the evolution of languages.

For example, in France, hui later recomplexified into aujourd’hui (“on the day of today”).

Historically and sociologically, the evolution of aujourd’hui seems to correspond with the long reconstruction of western Europe after the collapse of the Roman Empire.

Aujourd’hui emerged as a phrase in the early modern period when courtly etiquette was replacing brute military might as a source of power and respect.

In fact, the term is currently being complexified even further into the phrase au jour d’aujourd’hui.

But just as “hui” seemed insufficiently expressive to their ancestors, who added “au jour de”, so present-day French speakers, unaware of its history, have become dissatisfied with the fused form “aujourd’hui”. In colloquial speech people have started saying “au jour d’aujourd’hui” – “on the day of on the day of this day”.

That kind of embellishment and ornamentation of a phrase might be an example of sustaining innovation in language.

But how would one explain the shrinkage of a term?

Disruptive innovation would not describe the shrinkage of a term because the new term:

- is not from an entirely distinct set of words;

- does not improve (complexify) over time; and

- does not radically sweep away the tradition of which it is, in fact, a remnant.

Again, hui comes from Old French, which was spoken in northern France from the 11th to the 14th centuries.

Perhaps this shrunken form of the terms hoc die and later hodie can be taken as an example of “efficiency innovation”.

https://www.inc.com/christine-lagorio/clayton-christensen-capitalist-dilemma.html

The third type are “efficiency” innovations. These reduce the cost of making and distributing existing products and services–like Toyota’s just-in-time manufacturing in carmaking and Geico in online insurance underwriting. Efficiency innovations almost always reduce the net number of jobs in an industry, allow the same amount of work (or more) to get done using fewer people. Efficiency innovations also emancipate capital for other uses. Without them, much of an economy’s capital is held captive on balance sheets, tied up in inventory, working capital, and balance-sheet reserves.

Efficiency innovation might likewise explain the simplification of men’s formal wear during conservative periods, such as the 1950s and the 1980s (* above).

The Japanese pajama suit is an example of efficiency innovation insofar as it is a radical simplification of a business suit.

However, as an example of sleepwear that has been upgraded, the pajama suit is an example of sustaining innovation.

If the pajama suit catches on and replaces contemporary formal wear, then it would represent disruptive innovation.

Perhaps language innovation follows a similar pattern, in which words are:

- borrowed from other fields or languages to express something when current words fail (disruptive innovation);

- downsized or eliminated altogether (efficiency innovation), such as the evolution from hoc die to hodie to hui; or

- elaborated upon over time (sustaining innovation), such as the development of aujourd’hui from hui.

A newly invented word is known as a “neologism”.

https://en.wikipedia.org/wiki/Neologism

A neologism (/niːˈɒlədʒɪzəm/; from Greek νέο- néo-, “new” and λόγος lógos, “speech, utterance”) is a relatively recent or isolated term, word, or phrase that may be in the process of entering common use, but that has not yet been fully accepted into mainstream language.

Popular examples of neologisms can be found in science, fiction (notably science fiction), films and television, branding, literature, jargon, cant, linguistic and popular culture.

Examples include laser (1960) from Light Amplification by Stimulated Emission of Radiation; robotics (1941) from Czech writer Karel Čapek’s play R.U.R. (Rossum’s Universal Robots)[6]; and agitprop (1930) (a portmanteau of “agitation” and “propaganda”).

In all of these examples, the words in question derive from a field on the margins of the mainstream.

The new words became established in those fringe fields and then moved into the mainstream, sometimes rapidly becoming established.

This is disruptive innovation.

The concept of disruptive innovation can also be applied to the adoption of foreign terms in loanwords and calques.

https://en.wikipedia.org/wiki/Loanword

A loanword (also loan word or loan-word) is a word adopted from one language (the donor language) and incorporated into another language without translation.

Examples of loanwords in the English language include café (from French café, which literally means “coffee”), bazaar (from Persian bāzār, which means “market”), and kindergarten (from German Kindergarten, which literally means “children’s garden”).

Sometimes, people cannot find the words to describe something.

In such cases, they may turn to another language in which the concept is highly developed.

There is a phrase that English speakers use — if only facetiously — for some special quality that cannot be described or expressed.

Notably, it comes directly from the French:

Je ne sais quoi.

Who are the great innovators of language?

Is it poets and writers like Shakespeare?

Not really, it seems.

There is the romantic notion of the tragic, god-like poet creating and introducing new words into language.

That is a bit like the tail wagging the dog.

https://www.pri.org/stories/2013-08-19/did-william-shakespeare-really-invent-all-those-words

Linguistic innovation is vastly more democratic.

In fact, one might say that most linguistic innovation comes from a fringe within the mainstream.

Innovation in language overwhelmingly originates from teenage girls.

[F]emale teen linguists should be lauded for their longtime innovation — they’ve been shaking things up for centuries.

[F]emale teenagers are actually “language disruptors” — innovators who invent new words that make their way into the vernacular. “To use a modern metaphor, young women are the Uber of language,” she writes.

Women are consistently responsible for about 90 percent of linguistic changes today, writes McCulloch. Why do women lead the way with language? Linguists aren’t really sure. Women may have greater social awareness, bigger social networks or even a neurobiological leg up. There are some clues to why men lag behind: A 2009 study estimated that when it comes to changing language patterns, men trail by about a generation.

The strange new words and phrases introduced by female adolescent humans become established over time and come to seem normal and boring a generation later.

(“Clueless”, 1995, trailer)

Why are adolescent female humans so innovative in their use of language?

It might be for the same reason that humans wear clothes in the first place.

After all, “Clueless” is a movie about clothes as much as it is about southern California slang.

And why do humans wear clothes?

The answer is in the Bible.

More specifically, in the book of Genesis.

The first woman and the first man ate the fruit of the Tree of Knowledge of Good and Evil.

At that moment, they realized that they were naked.

Genesis 3

6 When the woman saw that the fruit of the tree was good for food and pleasing to the eye, and also desirable for gaining wisdom, she took some and ate it. She also gave some to her husband, who was with her, and he ate it. 7 Then the eyes of both of them were opened, and they realized they were naked; so they sewed fig leaves together and made coverings for themselves.

The history of clothing reflects this human self-consciousness.

In the beginning, new forms of clothing are adopted because they are more practical and comfortable.

But over time, these forms of clothing, initially so utilitarian, become increasingly refined and elaborate.

The first human clothing might have been ochre.

https://en.wikipedia.org/wiki/Ochre

Ochre (/ˈoʊkər/ OH-kər; from Ancient Greek: ὤχρα, from ὠχρός, ōkhrós, pale) or ocher (minor variant in American English) is a natural clay earth pigment which is a mixture of ferric oxide and varying amounts of clay and sand.

Ochre is very useful.

Ochre has uses other than as paint: “tribal peoples alive today . . . use either as a way to treat animal skins or else as an insect repellent, to staunch bleeding, or as protection from the sun. Ochre may have been the first medicament.”

It also has powerful symbolic meaning for the cultures that used it.

According to some scholars, Neolithic burials used red ochre pigments symbolically, either to represent a return to the earth or possibly as a form of ritual rebirth, in which the colour symbolises blood and the Great Goddess.

It seems that the human use of ochre can be traced back as far as 200,000 years ago, the very dawn of the human species.

Red ochre has been used as a colouring agent in Africa for over 200,000 years.[29] Women of the Himba ethnic group in Namibia use a mix of ochre and animal fat for body decoration, to achieve a reddish skin colour. The ochre mixture is also applied to their hair after braiding.[30] Men and women of the Maasai people in Kenya and Tanzania have also used ochre in the same way.

At one time, humans seem to have coated everything in ochre.

The use of ochre is particularly intensive: it is not unusual to find a layer of the cave floor impregnated with a purplish red to a depth of eight inches. The size of these ochre deposits raises a problem not yet solved. The colouring is so intense that practically all the loose ground seems to consist of ochre. One can imagine that the Aurignacians regularly painted their bodies red, dyed their animal skins, coated their weapons, and sprinkled the ground of their dwellings, and that a paste of ochre was used for decorative purposes in every phase of their domestic life. We must assume no less, if we are to account for the veritable mines of ochre on which some of them lived…

The widespread use of ochre does not seem to be purely practical, or even mostly practical.

Humans seem to have an extraordinary degree of self-consciousness, and ochre served them as a kind of clothing.

This puts the evolution of formal wear — and architecture, which is closely tied to clothing, as well as other art forms — within a certain pattern.

New styles of clothing emerge because they are much more practical than conventional wear, but self-consciousness pushes them toward greater ornamentation.

The evolution of formal wear thus passes through three kinds of periods in a society’s history:

- times when society is open to adopting entirely new manners of dress for pragmatic reasons of function and convenience — quite un-self-consciously;

- periods when the pragmatic new manner of dress is increasingly ornamented, very self-consciously;

- conservative periods when styles are streamlined because they have become much too outlandish — again, very self-consciously.

Beau Brummell is unique here because his sartorial innovations spanned all three tendencies: they were functional (the dinner jacket as all-purpose formal wear), ornate (his necktie), and elegantly streamlined.

Looking at the American business suit, one might understand such a pattern in terms of the three types of business innovation.

- During periods of “strength” — expansion and consolidation of power — there is an open-minded willingness to adopt new types of practical and comfortable clothing, such as the adoption of the tuxedo among the American upper classes in the 1880s (disruptive innovation).

- The business suit became more ornate in the 1920s and 1970s, periods of political withdrawal after foreign policy tragedy (sustaining innovation).

- The business suit became more streamlined in the 1950s and 1980s, periods of conservatism (efficiency innovation).

This can also be expressed in ethnic or national terms, that is, in terms of groups that differ in how confidently they embrace innovation.

- Strong, confident, healthy cultures are more willing to adopt foreign ways. The Japanese and South Koreans are examples, although their styles of adoption differ. In public, the Japanese have completely gone over to the modern, Western way of doing things, but in the private realm and on special occasions (rituals, celebrations), they revert to tradition (e.g., kimonos). Koreans alter traditional ways (e.g., the hanbok) and use them in everyday life.

- Strong, confident, healthy cultures are flattered when they are imitated. There was the case of the American high-school student who wore a traditional Chinese cheongsam or qipao to her prom and was accused by Americans of “cultural (mis)appropriation”. Yet there was no such outrage in China. In fact, the Chinese had adopted that style of dress from their Manchu overlords, who had been pastoral nomads (earlier the Chinese had worn long, flowing, inconvenient robes).

https://www.nytimes.com/2018/05/02/world/asia/chinese-prom-dress.html

American culture would seem to be a strong, confident culture in that it borrows freely and assumes its own desirability to foreigners, but it might diverge from the pattern in one respect.

For example, when people think of “American food”, they think of apple pie, hamburgers and hot dogs, the last two being simplified and industrialized versions of German food.

Americans take it for granted that everyone in the world wants to eat American fast food.

That is, Americans have a “strong”, confident attitude, but it’s not so much a “culture” as it is mass production, commerce and exploitation.

Americans borrow from other cultures, but rather than adapting and refining those borrowings, they make them cruder.

Hence, “cultural appropriation” might be a big issue in the USA because American cultural borrowing commodifies and dumbs down what it borrows.

However, this might not be true of the American professional classes.

Affluent, educated Americans are quite proud of their mastery of other cultures’ cuisines, for example, and use it as a marker of social distinction.

If the pajama suit catches on, it might signify that the 2020s are a period of confident expansiveness in the USA.

The question might be which America.

Tech executives might feel at ease wearing a pajama suit — a move up from the black hoodie.

Like the tuxedo, the pajama suit would be the emblem of a new upper class, dressed in a style that the rest of us would feel uncomfortable and presumptuous wearing.

The pajama suit would become an ironic symbol of power, the way the men’s earring was first worn by street thugs who knew that nobody was going to laugh in their faces.

It is not just humans that are self-conscious.

Certain other species of animals likewise seem to possess a certain individual self-consciousness.

These include the great apes, Asian elephants, dolphins, orcas, Eurasian magpies and cleaner wrasses.

This has been studied experimentally.

https://en.wikipedia.org/wiki/Mirror_test

The mirror test—sometimes called the mark test, mirror self-recognition (MSR) test, red spot technique, or rouge test—is a behavioral technique developed in 1970 by American psychologist Gordon Gallup Jr. as an attempt to determine whether an animal possesses the ability of visual self-recognition.

A bright dot is surreptitiously deposited on the animal in a place on its body that it cannot see.

The animal is then placed in front of a mirror.

Animals that then study the dot while looking in the mirror are understood to be self-aware.

In the classic MSR test, an animal is anesthetized and then marked (e.g., painted or a sticker attached) on an area of the body the animal cannot normally see. When the animal recovers from the anesthetic, it is given access to a mirror. If the animal then touches or investigates the mark, it is taken as an indication that the animal perceives the reflected image as itself, rather than of another animal.

Again, very few species pass the test.

Very few species have passed the MSR test.

Species that have include:

- the great apes (including humans),

- a single Asiatic elephant,

- dolphins,

- orcas,

- the Eurasian magpie, and

- the cleaner wrasse.

A wide range of species has been reported to fail the test, including

- several species of monkeys,

- giant pandas, and

- sea lions.

Self-awareness seems to increase with intelligence.

Among the animals, humans are unique in their possession of language.

Language might enhance self-awareness to an even higher level.

Enhanced self-awareness could be a curse of sorts on humanity.

(“True Detective”, s1, e1, car conversation between Rust Cohle and Martin Hart)

I think human consciousness

Was a tragic misstep in evolution.

We became too self aware

Nature created an aspect of nature

Separate from itself.

We are creatures that should not exist

By natural law. We are things

Laboring with the illusion of

Having a self.

This secretion of sensory experience,

And feeling.

Programmed with total assurance,

That we are each somebody.

When, in fact, everybody’s nobody.

Strictly speaking, self-awareness is different from consciousness.

https://en.wikipedia.org/wiki/Self-awareness

In philosophy of self, self-awareness is the experience of one’s own personality or individuality.[1][2] It is not to be confused with consciousness in the sense of qualia. While consciousness is being aware of one’s environment and body and lifestyle, self-awareness is the recognition of that awareness.[3] Self-awareness is how an individual consciously knows and understands their own character, feelings, motives, and desires.

It could be that the human neurological system in particular facilitates identification with others and thus fosters the objectivization of the self.

There are questions regarding what part of the brain allows us to be self-aware and how we are biologically programmed to be self-aware. V.S. Ramachandran has speculated that mirror neurons may provide the neurological basis of human self-awareness.[5] In an essay written for the Edge Foundation in 2009, Ramachandran gave the following explanation of his theory: “… I also speculated that these neurons can not only help simulate other people’s behavior but can be turned ‘inward’—as it were—to create second-order representations or meta-representations of your own earlier brain processes. This could be the neural basis of introspection, and of the reciprocity of self awareness and other awareness. There is obviously a chicken-or-egg question here as to which evolved first, but… The main point is that the two co-evolved, mutually enriching each other to create the mature representation of self that characterizes modern humans.”

That is, if others can be perceived as having minds and selves, then the individual likewise perceives themselves as having a mind or a self — and/or vice versa.

https://en.wikipedia.org/wiki/Theory_of_mind

Theory of mind (ToM) is a popular term from the field of psychology as an assessment of an individual human’s degree of capacity for empathy and understanding of others. ToM is one of the patterns of behavior that is typically exhibited by the minds of neurotypical people, that being the ability to attribute—to another or oneself—mental states such as beliefs, intents, desires, emotions and knowledge. Theory of mind as a personal capability is the understanding that others have beliefs, desires, intentions, and perspectives that are different from one’s own. Possessing a functional theory of mind is considered crucial for success in everyday human social interactions and is used when analyzing, judging, and inferring others’ behaviors.

If humans perceive themselves because they can perceive others, then the perception of the self is in some sense the very act of constructing the self.

The experience of having a self would therefore be a byproduct of social interaction.

The relationship between self-awareness and consciousness should be clarified.

Self-awareness might be seen as a subset of consciousness.

That is, if an organism possesses a sense of self, then it is necessarily also conscious.

However, a conscious organism does not necessarily possess a notion of a self.

As noted above, the more intellectually advanced a species is, the greater its self-awareness (self-creation).

Moreover, mentally sophisticated organisms seem to experience consciousness more intensely and fully than less advanced ones.

It is important to define the essential qualities of consciousness.

https://www.bbc.com/future/article/20190326-are-we-close-to-solving-the-puzzle-of-consciousness

[A]ny conscious experience needs to be structured, for instance – if you look at the space around you, you can distinguish the position of objects relative to each other. It’s also specific and “differentiated” – each experience will be different depending on the particular circumstances, meaning there are a huge number of possible experiences. And it is integrated. If you look at a red book on a table, its shape and colour and location – although initially processed separately in the brain – are all held together at once in a single conscious experience. We even combine information from many different senses – what Virginia Woolf described as the “incessant shower of innumerable atoms” – into a single sense of the here and now.

For the neuroscientist Giulio Tononi, the more information that is integrated within the brain, the higher the level of consciousness.

[W]e can identify a person’s (or an animal’s, or even a computer’s) consciousness from the level of “information integration” that is possible in the brain (or CPU). According to his theory, the more information that is shared and processed between many different components to contribute to that single experience, then the higher the level of consciousness.

The input of data alone does not translate into conscious awareness.

That is, if mere data absorption signified consciousness, then cameras and sound recorders would be conscious.

Perhaps the best way to understand what this means in practice is to compare the brain’s visual system to a digital camera. A camera captures the light hitting each pixel of the image sensor – which is clearly a huge amount of total information. But the pixels are not “talking” to each other or sharing information: each one is independently recording a tiny part of the scene. And without that integration, it can’t have a rich conscious experience.

The brain, in contrast, takes in data, distributes it across many regions, and shares and processes it among them.

Like the digital camera, the human retina contains many sensors that initially capture small elements of the scene. But that data is then shared and processed across many different brain regions. Some areas will be working on the colours, adapting the raw data to make sense of the light levels so that we can still recognise colours even in very different conditions. Others examine the contours, which might involve guessing the parts of an object are obscured – if a coffee cup is in front of part of the book, for instance – so you still get a sense of the overall shape. Those regions will then share that information, passing it further up the hierarchy to combine the different elements – and out pops the conscious experience of all that is in front of us.

The same goes for our memories. Unlike a digital camera’s library of photos, we don’t store each experience separately. They are combined and cross-linked to form a meaningful narrative. Every time we experience something new, it is integrated with that previous information. It is the reason that the taste of a single madeleine can trigger a memory from our distant childhood – and it is all part of our conscious experience.

In experiments using transcranial magnetic stimulation (TMS), the outer cortex of the brain was stimulated with magnetic pulses, both in volunteers who were awake and in volunteers under anesthesia.

The cortex is the dark, bark-like outer layer of the brain.

When awake, you would observe a complex ripple of activity as the brain responds to the TMS, with many different regions responding, which Tononi takes to be a sign of information integration between the different groups of neurons.

But the brains of the people under propofol and xenon did not show that response – the brainwaves generated were much simpler in form compared to the hubbub of activity in the awake brain. By altering the levels of important neurotransmitters, the drugs appeared to have “broken down” the brain’s information integration – and this corresponded to the participants’ complete lack of awareness during the experiment. Their inner experience had faded to black.

Anesthesia thus breaks down the brain’s processing and integration of information, and with it consciousness.

Unlike classic anesthetics such as propofol and xenon, ketamine cuts off data input from the external world while still allowing internal information to be processed.

Patients who have been under the influence of ketamine later report wild, dreamlike fantasies.

Importantly, the possession of consciousness has less to do with the sheer number of brain cells and more to do with the interchange of information within the brain.

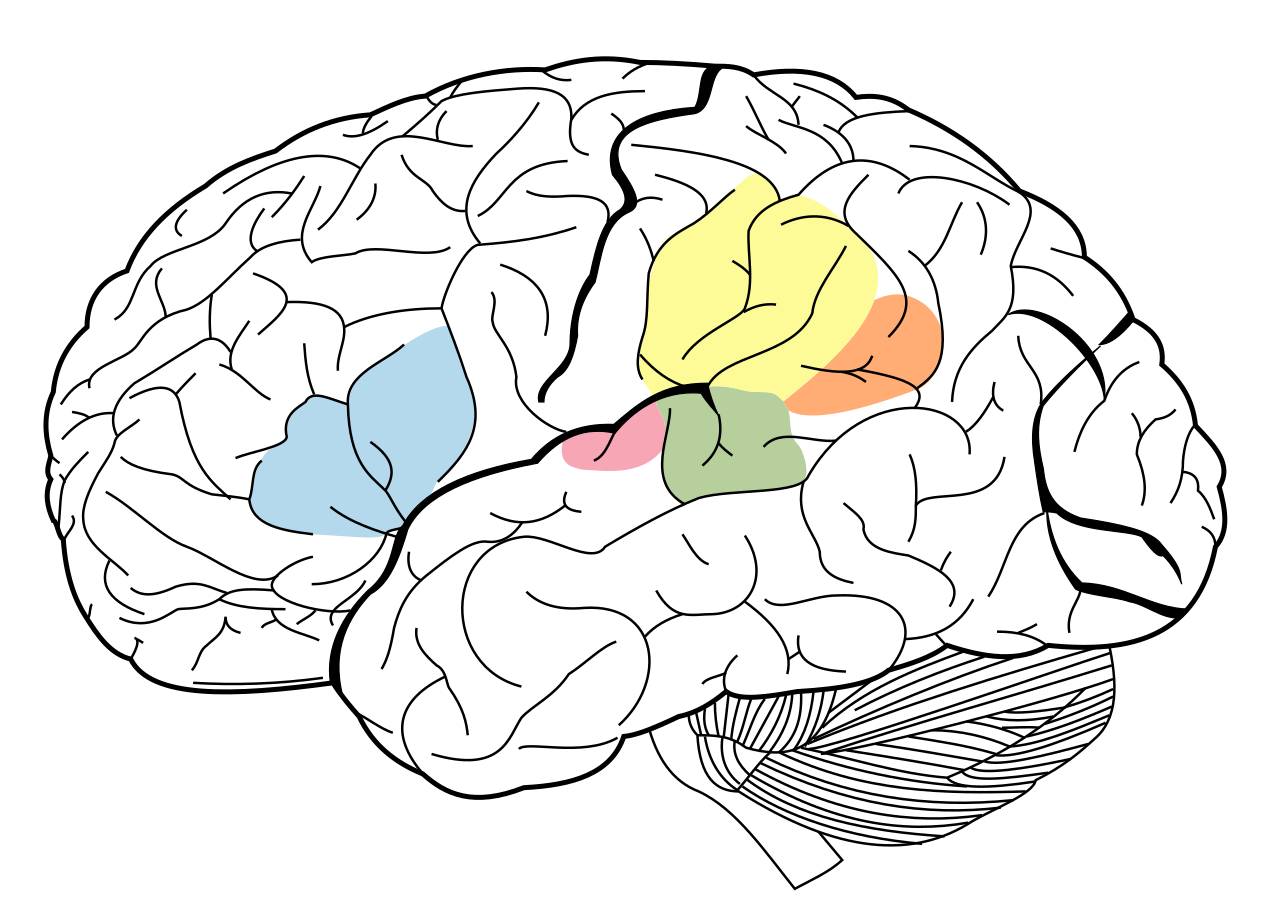

This might explain why consciousness is more connected to the outer regions of the human brain.

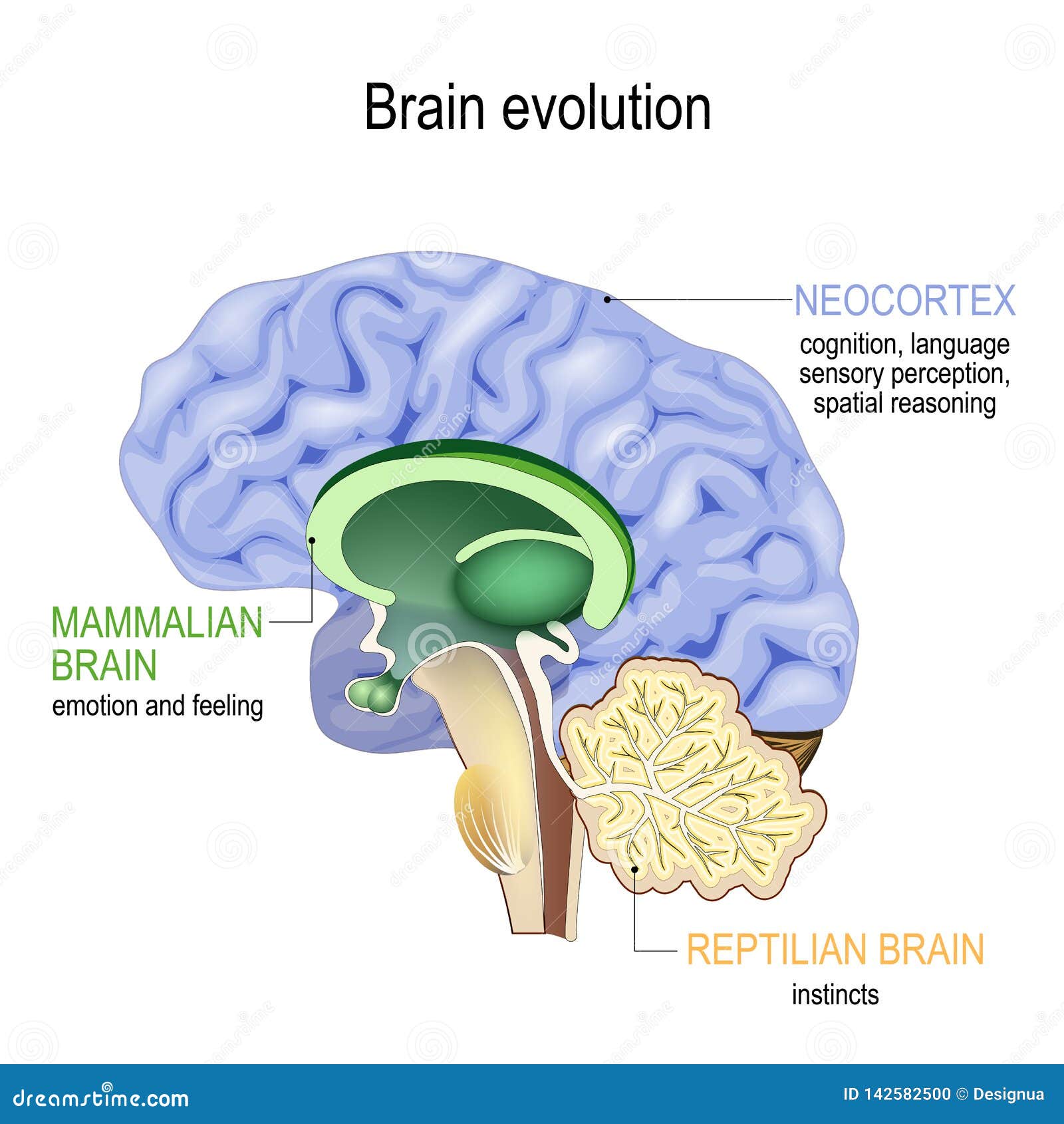

The cortex, which expanded most recently in human evolution, processes complex information.

This would make the cortex — especially the cerebral cortex, the dense outside layer — the prime location of higher forms of consciousness.

Interestingly, the philosopher Friedrich Nietzsche once speculated on the origin of advanced human consciousness.

In what he described as his most outrageously bold guess, Nietzsche said that higher consciousness is founded on language, that uniquely human possession.

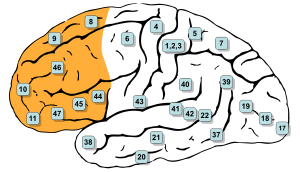

Indeed, the language center of the brain is situated in the outer cerebral cortex, usually in the left hemisphere.

https://en.wikipedia.org/wiki/Language_center

Information is exchanged in a larger system including language-related regions. These regions are connected by white matter fiber tracts that make possible the transmission of information between regions.[3] The white matter fiber bunches were recognized to be important for language production after suggesting that it is possible to make a connection between multiple language centers.[3] The three classical language areas that are involved in language production and processing are Broca’s and Wernicke’s areas, and the angular gyrus.

Regions of the brain at the base of the skull are related to unconscious physiological regulation, such as breathing, physical coordination and so forth.

The cerebellum is a case in point.

The cerebellum, for instance, is the walnut-shaped, pinkish-grey mass at the base of the brain and its prime responsibility is coordinating our movements. It contains four times as many neurons as the cortex, the bark-like outer layer of the brain – around half the total number of neurons in the whole brain. Yet some people lack a cerebellum (either because they were born without it, or they lost it through brain damage) and they are still capable of conscious perception, leading a relatively long and “normal” life without any loss of awareness.

These cases wouldn’t make sense if you just consider the sheer number of neurons to be important for the creation of conscious experience. In line with Tononi’s theory, however, the cerebellum’s processing mostly happens locally rather than exchanging and integrating signals, meaning it would have a minimum role in awareness.

Computers as they currently exist, on this view, lack the complex, integrated processing of information that makes consciousness possible.

The basic architecture of the computers we have today – made from networks of transistors – preclude the necessary level of information integration that is necessary for consciousness. So even if they can be programmed to behave like a human, they would never have our rich internal life.

“There is a sense, according to some, that sooner rather than later computers may be cognitively as good as we are – not just in some tasks, such as playing Go, chess, or recognising faces, or driving cars, but in everything,” says Tononi. “But if integrated information theory is correct, computers could behave exactly like you and me – indeed you might [even] be able to have a conversation with them that is as rewarding, or more rewarding, than with you or me – and yet there would literally be nobody there.” Again, it comes down to that question of whether intelligent behaviour has to arise from consciousness – and Tononi’s theory would suggest it’s not.

He emphasises this is not just a question of computational power, or the kind of software that is used. “The physical architecture is always more or less the same, and that is always not at all conducive to consciousness.” So thankfully, the kind of moral dilemmas seen in series like Humans and Westworld may never become a reality.

In summary, Tononi’s argument is that higher consciousness exists where complex information is processed and integrated.

In particular, this happens in the outside region of the human brain — the cortex — and not so much in the base of the human brain and in less advanced animals.

Somewhat relatedly, the neuroscientist David Badre explains the limits of human consciousness, namely why people are so bad at multitasking.

It’s all about the cortex.

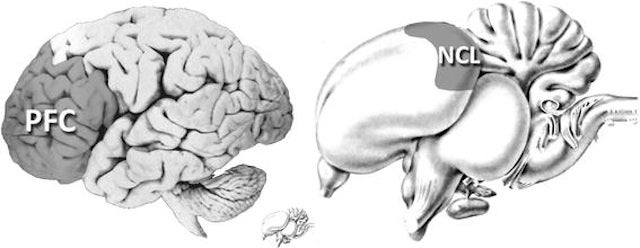

In particular, humans are capable of goal-oriented activity because of the prefrontal cortex.

This is the seat of “cognitive control” or “executive function” which interacts with the whole brain.

Birds have a frontal region of the brain functionally equivalent to the prefrontal cortex.

It is known as the nidopallium.

https://en.wikipedia.org/wiki/Nidopallium

The nidopallium, meaning nested pallium, is the region of the avian brain that is used mostly for some types of executive functions but also for other higher cognitive tasks.

The entire nidopallium region is a compelling area for neuroscientific research, especially in relation to its capacity for complex cognitive function.[2][3] More specifically, the nidopallium caudolateral appears particularly involved with the aspect of executive function in the avian brain. One study has been performed to demonstrate that this area is in fact largely analogous to the mammalian prefrontal cortex – the region of the brain covering the most rostral section of the frontal lobe, responsible for more complex cognitive behaviour in mammals, such as ourselves.

https://io9.gizmodo.com/crows-could-be-the-key-to-understanding-alien-intellige-1480720559

The crow, and some of its relatives in the corvid family (such as jays and magpies), are among the only intelligent species we’ve encountered outside the world of mammals. But their brains are utterly different from ours. The mammalian seat of reason is in our prefrontal cortex, a thin layer of nerve-riddled tissue on the outside of the front region of our brains. Birds have no prefrontal cortex (PFC). Instead, they have the nidopallium caudolaterale (NCL), which is located toward the middle of their brains.

The Eurasian magpie was the only species of bird to decisively pass the self-awareness test.

Its nidopallium is particularly large.

https://en.wikipedia.org/wiki/Eurasian_magpie

The Eurasian magpie is one of the most intelligent birds, and it is believed to be one of the most intelligent of all non-human animals.[2] The expansion of its nidopallium is approximately the same in its relative size as the brain of chimpanzees, gorillas, orangutans and humans.[3] It is the only bird known to pass the mirror test, along with very few other non-avian species.

So, cognitive control in general is associated with the front part of the brain called the prefrontal cortex. But one shouldn’t make the mistake of thinking that it’s one function or it’s just one thing.

There is actually multiple networks and systems in the brain, and mechanisms, that give rise to control. And as a result, it’s something that is affected in a wide range of neurological and psychiatric disorders. You can also have two patients who will show problems with cognitive control, an inability to control themselves, but for different underlying reasons. The mechanism underlying those things actually is different, even though they’re showing the same behaviour.

A thermostat, for example, is a control system with a goal.

They call it cognitive control because it’s doing so based on some internal representation you have – a goal or a plan as opposed to being controlled by our environment, which we are a lot as well. External stimuli, things that we process through the senses also control our behaviour, sometimes, particularly for habits and strongly associated actions.
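The thermostat analogy can be made concrete with a short Python sketch. It is a hypothetical illustration, not taken from Badre: a simple on/off controller whose decisions are driven by an internal goal (the setpoint) compared against a sensed input. The room model, the hysteresis band and all the numbers are assumptions chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Thermostat:
    """Toy on/off controller steered by an internal goal, the setpoint."""
    setpoint: float          # the goal temperature (assumed, in degrees Celsius)
    hysteresis: float = 0.5  # dead band that prevents rapid on/off switching
    heating: bool = False

    def step(self, measured: float) -> bool:
        """Compare the sensed temperature with the goal and decide whether to heat."""
        if measured < self.setpoint - self.hysteresis:
            self.heating = True
        elif measured > self.setpoint + self.hysteresis:
            self.heating = False
        # Inside the dead band the previous decision is kept.
        return self.heating


def simulate(thermostat: Thermostat, start: float, steps: int) -> list[float]:
    """Hypothetical room: warms 1.0 degree per step when heating, cools 0.5 otherwise."""
    temps = [start]
    for _ in range(steps):
        heating = thermostat.step(temps[-1])
        temps.append(temps[-1] + (1.0 if heating else -0.5))
    return temps


temps = simulate(Thermostat(setpoint=20.0), start=15.0, steps=20)
print(temps)
```

With these assumed numbers, the simulated room warms from 15 degrees and then cycles between 19 and 21 degrees around its 20-degree goal. The controller pursues a single goal fixed in advance; nothing in it can take on a new goal or rule.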

This system of cognitive control is not suited to doing more than one thing at a time.

But when we’re trying to do multiple things at once, we’re trying to orchestrate more than one action through the same system. And because of the way we think about our actions, the way we are able to assemble our actions, relies sometimes on the same components it causes interference.

Multitasking is something that’s not even just about trying to do multiple tasks at the same time, it’s about putting yourself in an environment where you have cues to multiple tasks that will cause that competition and interference.

A spider can build a complex web, but this is largely unthinking instinctual behavior that evolved over millions of years.

Human construction, in contrast, is carefully thought through and involves imagination and invention.

A computer is quite similar to a spider in the narrowness and rigidity of its processing of data.

If you change aspects of that game of chess or Go, you change the rules, that AI is going to have a hard time. With a human player, you could say, today we’re going to do this, we’re going to add this new crazy rule to this game and they’ll be able to accommodate it and do it immediately. Not to mention the fact that human player will also be able to go and have like a brunch with their friends and get on Zoom and talk to people. And do, you know, a seemingly endless number of other tasks really well, which that AI could never do.

Short-term working memory is the horizon of human consciousness.

So, actually specifically working memory, which is kind of our short-term, you know, we’re holding in mind right now and they sort of capture our consciousness that this moment is actually really crucial for control.

When we concentrate on a task within the horizon of our working memory, we are closing a “gate” and thus sealing off the working memory from more information.

So the ability to control memory is really important. And the metaphor we use for that is a gate. It’s basically when the gate is open, we can update memory, we can allow information that’s in memory to influence what we’re doing. When the gate is closed, we can keep irrelevant things out of our memory. And we also kind of hold on to stuff and we don’t use it at the wrong moment to try to drive our behaviour.
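The gate metaphor can also be sketched in a few lines of Python. This is a loose, hypothetical illustration of the metaphor as described in the quotation above, assuming a single gate that governs both updating a memory slot and letting its content influence behaviour. It is not Badre’s model of prefrontal cortex and basal ganglia interaction, and the class and method names are invented for the example.

```python
from typing import Optional


class GatedMemory:
    """Toy working-memory slot guarded by a single gate (illustrative only)."""

    def __init__(self) -> None:
        self.content: Optional[str] = None  # what is currently held in mind
        self.gate_open = False              # closed: keep content, block new input

    def open_gate(self) -> None:
        self.gate_open = True

    def close_gate(self) -> None:
        self.gate_open = False

    def offer(self, item: str) -> None:
        """A new input updates memory only while the gate is open."""
        if self.gate_open:
            self.content = item

    def influence(self) -> Optional[str]:
        """Held content guides behaviour only while the gate is open."""
        return self.content if self.gate_open else None


memory = GatedMemory()
memory.open_gate()
memory.offer("finish the report")   # gate open: the goal is stored
memory.close_gate()
memory.offer("new email arrived")   # gate closed: the distractor is ignored
print(memory.content)               # prints: finish the report
print(memory.influence())           # prints: None (held, but not acting)

# A second task that reuses the same gate overwrites the first goal.
memory.open_gate()
memory.offer("reply to the email")
print(memory.content)               # prints: reply to the email
```

In this toy picture, the last step hints at why multitasking is costly: a second task that opens the same gate to store its own goal simply overwrites the first.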

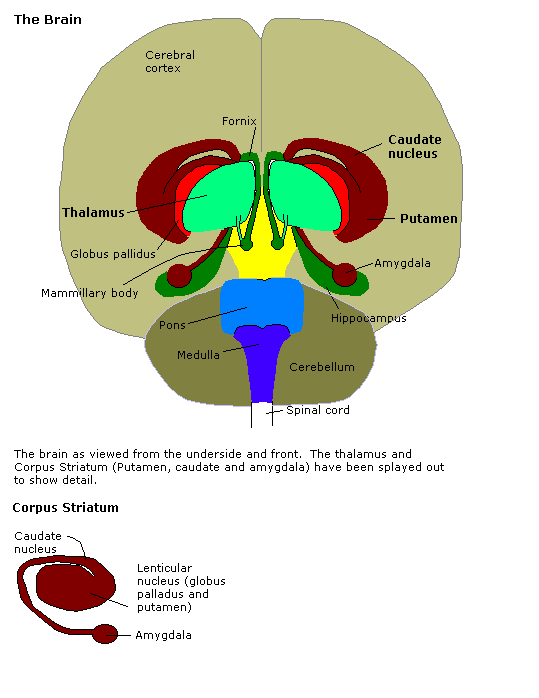

The gates are created by the interaction of the prefrontal cortex in the front of the brain, and the deeper basal ganglia closer to the core of the brain.

The mechanisms in the brain that actually enact these gates, at least the framework I describe in the book is that they’re enacted by interactions between the prefrontal cortex, which is maintaining this information, and a set of structures called the basal ganglia that together enact this gate by interacting with one another.

With multitasking, the gates don’t really close properly and we lose our focus.

However, there might be a way of getting better at multitasking.

The key point is that it is not just the neocortex that is at work in multitasking.

The neocortex is the outer layer of the brain, and it is large and highly developed in humans.

The human neocortex is composed of various regions.

https://cosmosmagazine.com/health/body-and-mind/practice-may-help-you-to-really-multitask/

Garner and colleagues say they have discovered a missing piece in the brain’s efforts to multitask and showed that practice can mitigate its limitations.

The neural networks involved in multitasking were previously linked with the brain’s extensive outer layer – the neocortex – but Garner says there are lots of theoretical reasons to believe deeper brain structures such as the striatum are involved.

The striatum lies deep in the brain, near its center.

https://en.wikipedia.org/wiki/Striatum

The striatum, or corpus striatum[5] (also called the striate nucleus), is a nucleus (a cluster of neurons) in the subcortical basal ganglia of the forebrain. The striatum is a critical component of the motor and reward systems; receives glutamatergic and dopaminergic inputs from different sources; and serves as the primary input to the rest of the basal ganglia.

Functionally, the striatum coordinates multiple aspects of cognition, including both motor and action planning, decision-making, motivation, reinforcement, and reward perception.[2][3][4] The striatum is made up of the caudate nucleus and the lentiform nucleus.[6][7] The lentiform nucleus is made up of the larger putamen, and the smaller globus pallidus.

Within the striatum, the putamen seems to play a central role in multitasking.

https://cosmosmagazine.com/health/body-and-mind/practice-may-help-you-to-really-multitask/

Multitasking consistently recruited connectivity between the outer and inner brain regions, specifically the putamen in the striatum, and the extra cost of doing this was reduced after practice.

The team suggests impaired performance on more than one task can be at least partly attributed to the speed at which the putamen can communicate with the relevant cortical areas, although Garner says this is just one piece of a bigger puzzle.

The striatum also plays a crucial role in habit formation.

https://www.inverse.com/mind-body/how-to-change-bad-habits-in-the-brain-psychology

How do habits form in the brain? The process involves various cells and processes that help cement our daily rituals into routines. Dartmouth researchers recently discovered that the dorsolateral striatum, a region of the brain, experiences a short burst of activity when new habits are formed.

According to the research, published in the Journal of Neuroscience, it takes as little as half of a second for this burst to occur. And as a habit becomes stronger, the activity burst increases. The Dartmouth researchers found that habits can be controlled depending on how active the dorsolateral striatum is.

These findings illustrate how habits can be controlled in a tiny time window when they are first set in motion.

The strength of the brain activity in this window determines whether the full behavior becomes a habit or not.

The results demonstrate how activity in the dorsolateral striatum when habits are formed really does control how habitual animals are, providing evidence of a causal relationship.

After the rats were trained to run a maze, researchers excited cells in their dorsolateral striatum, leading the rats to run more vigorously and habitually on the entire maze — the animals would no longer stop at the center to look around. When the cells were inhibited, the rats acted slowly and appeared to lose their habit altogether. The researchers then swapped out the tasty reward for something else. When the rats were excited, they still ran to the reward, but when they were inhibited, the rats “essentially refused to run when there was no reward to gain from it.”

Another piece of the habit puzzle was discovered earlier by MIT neuroscientists, who found that neurons in the striatum plays a major role in habit formation.

This is particularly true when it comes to “chunking,” a habit made up of many smaller actions, for example, “picking up our toothbrush, squeezing toothpaste onto it, and then lifting the brush to our mouth.”

The neurons “fire at the outset of a learned routine, go quiet while it is carried out, then fire again once the routine has ended,” according to a press release. “Once these patterns form, it becomes extremely difficult to break the habit.”

These two studies may explain how habits form in the brain.

However, a study out of Duke University found that a single type of neuron in the striatum called the fast-spiking interneuron serves as a “master controller” of habits.

They found that if it’s shut down, habits can be broken.

“This cell is a relatively rare cell but one that is very heavily connected to the main neurons that relay the outgoing message for this brain region,” said Nicole Calakos, an associate professor of neurology and neurobiology at the Duke University Medical Center, in a summary of the research. “We find that this cell is a master controller of habitual behavior, and it appears to do this by re-orchestrating the message sent by the outgoing neurons.”

In relation to human consciousness, habits can be a source of unconscious action.

A classic case would be “driver’s amnesia” or “highway hypnosis”.

People drive home from work and have no memory of the commute.

https://en.wikipedia.org/wiki/Highway_hypnosis

Highway hypnosis, also known as white line fever, is an altered mental state in which a person can drive a car, truck, or other automobile great distances, responding to external events in the expected, safe, and correct manner with no recollection of having consciously done so.[1] In this state, the driver’s conscious mind is apparently fully focused elsewhere, while seemingly still processing the information needed to drive safely. Highway hypnosis is a manifestation of the common process of automaticity.

Automaticity is the ability to do things without occupying the mind with the low-level details required, allowing it to become an automatic response pattern or habit.

It is usually the result of learning, repetition, and practice.

Examples of tasks carried out by ‘muscle memory’ often involve some degree of automaticity.

https://en.wikipedia.org/wiki/Automaticity

Examples of automaticity are common activities such as walking, speaking, bicycle-riding, assembly-line work, and driving a car (the last of these sometimes being termed “highway hypnosis”). After an activity is sufficiently practiced, it is possible to focus the mind on other activities or thoughts while undertaking an automatized activity (for example, holding a conversation or planning a speech while driving a car).

As one learns a task, control of it shifts toward deeper structures of the brain, such as the striatum, and the task becomes habitual and less conscious.

The philosopher John Dewey distinguished between “active” and “passive” habits.

Smoking tobacco would be a classic passive habit, whereas driving a car would be a more purposeful active habit.

But although driving a car may be an active habit, it is at best a semi-conscious activity.

As we become older, we transition from the intense self-consciousness and sense of wonder of childhood into routine and discipline.

Our lives therefore become more habitual and more stable, but the price is less creativity, less passion, less intensity, and less spontaneity.

Throughout the evolutionary history of humanity, the tendency has been toward a greater capacity to process information, and thus toward higher states of consciousness.

However, the journey of our individual lives is in the opposite direction.

But we do not want to admit the dualistic, cyborg nature of our existence — half child, half robot — with the robot winning out in the end.

“Westworld” is not about robots having consciousness or self-awareness; it is about how humans are programmed by habit.

But the viewers and the critics cannot admit to themselves their own cyborg nature.

“Westworld” was in a sense a prophecy of 2020, the year that humans proved to be habitual and stubbornly robotic, not altering their habits in the face of disaster.

This line of inquiry would be best pursued by looking at theories of habit in sociology, perhaps in relation to rules and agency.

This would presumably be best followed by a survey of consciousness and free will in philosophy.

So, the trajectory of the study of the pajama suit would go from history, to biology, to sociology, to religion and philosophy.